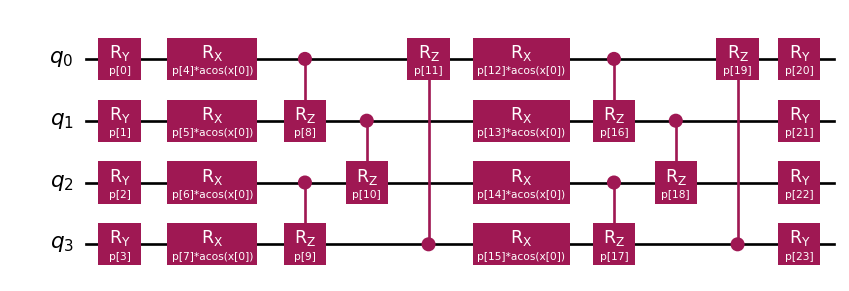

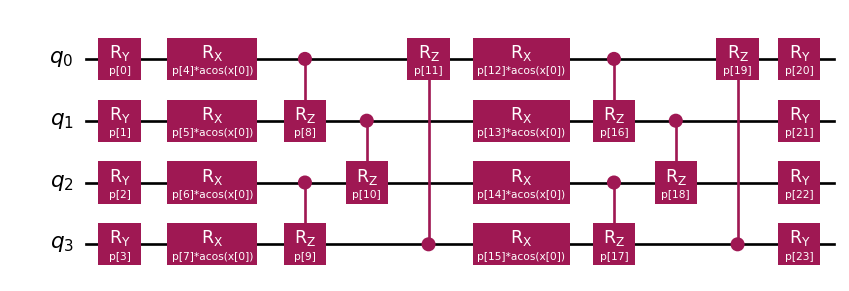

from squlearn.encoding_circuit import ChebyshevPQC

pqc = ChebyshevPQC(num_qubits = 4, num_features = 1, num_layers = 2)

pqc.draw("mpl")

There has been some criticism about this adaptation of the classical bio-inspired model.

but in fact the literature keeps growing about the potential of these techniques to provide similar results to those obtained within the state of the art but with much fewer resources (Peña Tapia, Scarpa, and Pozas-Kerstjens 2023).

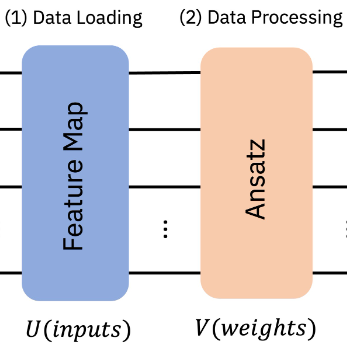

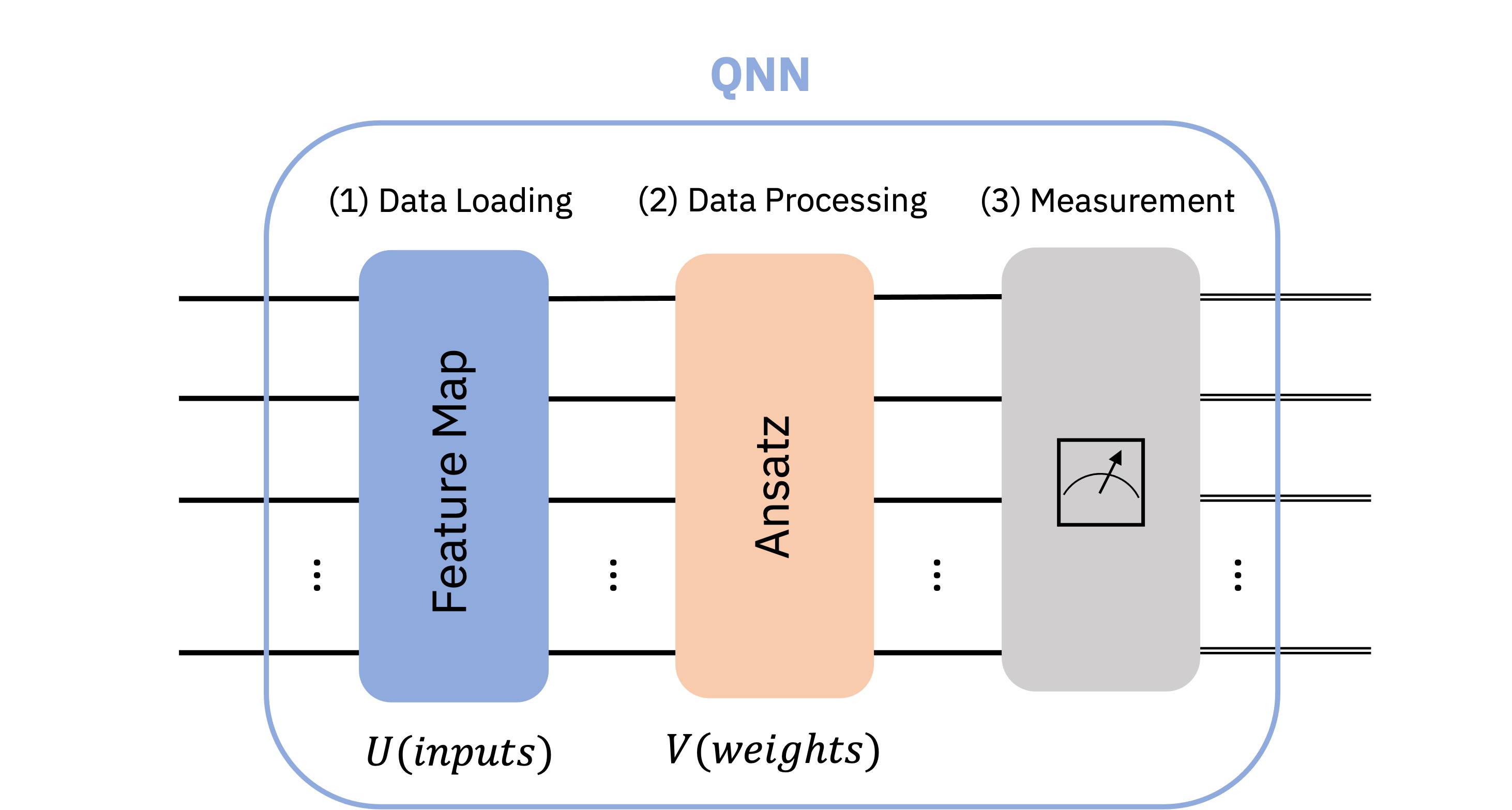

We already know classical data can be converted into quantum states. What if we take the chance to extend the existing quantum circuit so that it also performs the whole action until a class is selected as part of the measurement process?

The generic name of this type of circuit is the Variational Quantum Classifier and shows following structure.

It is all based in the same concept of trainable parameterized quantum circuits and we already have the means to adapt the parameters according to a target function. We might need to device if expectation value or a given sample is the ideal measure we would like to obtain from the circuit. This is often decided depending on which type of model we would like to obtain, a classification or a regression model.

Which ansatz should we select for the trainable part of the QNN? Well, this is an open question with no direct answer. As it happens in classical NN the amount of circuits we could select for our QNN is infinite. Classical NN architectures by means of experimentation and better understanding the inner workings of our brain, have come to an agreement of what seem to be clever guesses according to specific tasks:

So we will need to point to the literature to better understand which ansatz compositions will render best performance in our particular case. Many frameworks do provide already a set of ansatz actually implemented that reflect those existing in the literature (often also published by the same teams working at IBM or Xanadu) but they do provide interesting benchmarks that may help in our decision making process.

In essence we need to create two block, one encoding our data into quantum states (feature map) and a Parameterized Quantum Circuit (also ansatz) so that the circuit can be trained/tuned towards a specific purpose.

from qiskit import QuantumCircuit

from qiskit.circuit.library import ZZFeatureMap, RealAmplitudes

num_inputs = 2

feature_map = ZZFeatureMap(num_inputs)

ansatz = RealAmplitudes(num_inputs, reps=1)

# construct QNN

qc = QuantumCircuit(num_inputs)

qc.compose(feature_map, inplace=True)

qc.compose(ansatz, inplace=True)

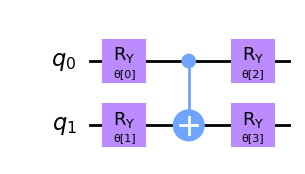

qc.draw(output="mpl")Real amplitudes is just an ansatz available to us following this shape below. It is versatile enough so it will help us reach the outcome we would like to obtain. But, your choice if you would like any other design for your ansatz.

We can then connect each piece of the puzzle forming our QNN.

from qiskit.algorithms.optimizers import COBYLA

from qiskit_machine_learning.algorithms.classifiers import NeuralNetworkClassifier

from qiskit_machine_learning.neural_networks import EstimatorQNN

# We append the estructure to the Estimator primitive

estimator_qnn = EstimatorQNN(

circuit=qc, input_params=feature_map.parameters, weight_params=ansatz.parameters

)

# construct neural network classifier

estimator_classifier = NeuralNetworkClassifier(

estimator_qnn, optimizer=COBYLA(maxiter=60), callback=callback_graph

)And compose a training procedure as it is often done, by calling .fit function and evaluating the prediction.

estimator_classifier.fit(features, labels)

estimator_classifier.score(features, labels)Multi-label classification can also be covered but in many cases a stacked set of QNNs (one-vs-all) is preferred so that we get a set of \(N\) specialized networks to balance the vote for \(N\) potential classes. Instead of manually creating all those circuits and datasets we can simply invoke prepared classes in frameworks such as Qiskit that will be able to deal with those internal complexities. One should pay attention though that training various models will increase the number of iterations/executions of the circuit needed for parameter fitting (as many times as classes are).

Regression tasks can also be performed if we consider the expectation value the one mapping our target value. In that case a simple change of evaluation function would do the trick. We will see that actually it is easy as well to invoke but as we already know, getting the expectation value over simple circuit sampling may require several evaluations of the circuit in order to get all basis changes for a proper expectation value evaluation.

Now we need to decide which ansatz is the best option in our case. There are several features we would like to consider but one of the most relevant ones might be expressivity. Expressivity is a key aspect with any function approximator, like with neural networks which are known to be universal approximators (Kidger and Lyons 2020). We expect Quantum Neural Networks to provide similar capacity as they have already being evaluated in similar terms (Peña Tapia, Scarpa, and Pozas-Kerstjens 2023) but this will be limited by the ansatz selection. The ansatz is essentially where a given Quantum Neural Network will produce states for a particular Hilbert space and expressivity, in those terms, refers to the capacity of covering said Hilbert space.

A \(4\) qubit system represents a \(2^4 = 16\) dimension Hilbert space (\(\mathcal{H}\)). How can we measure how expressive our ansatz is? Evaluating analytically the coverage of the Hilbert space might not be practical, but we could simply sample from the circuit a set of times and compare the states we get in terms of distance for said space. Fidelity can be used to measure the distance between two states as we saw before, so we get and compare it with what would be the ideal scenario, a uniform distribution of the entire fidelity space for maximal expressivity (Sim, Johnson, and Aspuru-Guzik 2019). If the states generated by our circuit can render all distances, from same sate (fidelity of one) to the orthogonal case (fidelity of zero) then our circuit can cover the whole spectrum to our Hilbert space. You can read more on the appendix about quantum features.

The easiest way forward is to look into the previous work, that way we can cut some corners and go straight to the configurations that worked for others. We have some references in the literature but the people working at sQUlearn have already implemented plenty options for us.

Imagine you would like to test the encoding at Reduction of finite sampling noise in quantum neural networks (Kreplin and Roth 2024).

from squlearn.encoding_circuit import ChebyshevPQC

pqc = ChebyshevPQC(num_qubits = 4, num_features = 1, num_layers = 2)

pqc.draw("mpl")

Easy pease! Same with others like Training Quantum Embedding Kernels on Near-Term Quantum Computers (Hubregtsen et al. 2022)

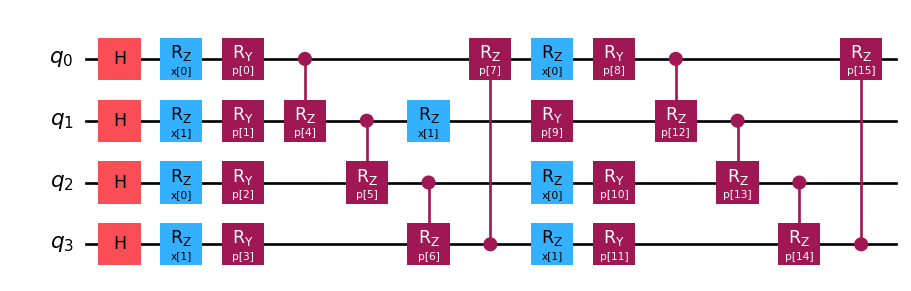

from squlearn.encoding_circuit import HubregtsenEncodingCircuit

pqc = HubregtsenEncodingCircuit(num_qubits=4, num_features=2, num_layers=2)

pqc.draw(output="mpl")

But you can also let your creativity flow…

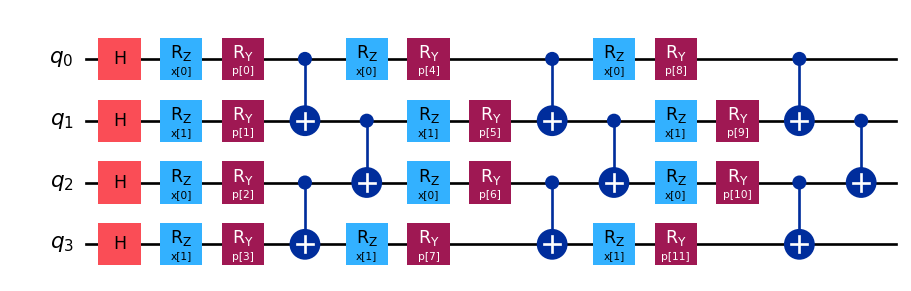

from squlearn.encoding_circuit import LayeredEncodingCircuit

from squlearn.encoding_circuit.layered_encoding_circuit import Layer

encoding_circuit = LayeredEncodingCircuit(num_qubits=4,num_features=2)

encoding_circuit.H()

layer = Layer(encoding_circuit)

layer.Rz("x")

layer.Ry("p")

layer.cx_entangling("NN")

encoding_circuit.add_layer(layer,num_layers=3)

encoding_circuit.draw(output="mpl")

More on this on sQUlearn’s documentation. Do check it out.